Prompt Hacking and Misuse of LLMs

Large Language Models (LLMs) can craft poetry, answer queries, and even write code. Yet with that power come inherent risks: the same prompts that enable LLMs to engage in meaningful dialogue can be manipulated with malicious intent. Prompt hacking, misuse, and a lack of comprehensive security protocols can turn these marvels of technology into tools of deception.

Harnessing the Dual LLM Pattern for Prompt Security with MindsDB - DEV Community

Exploring Prompt Injection Attacks, NCC Group Research Blog

Jailbreaking Large Language Models: Techniques, Examples, Prevention Methods

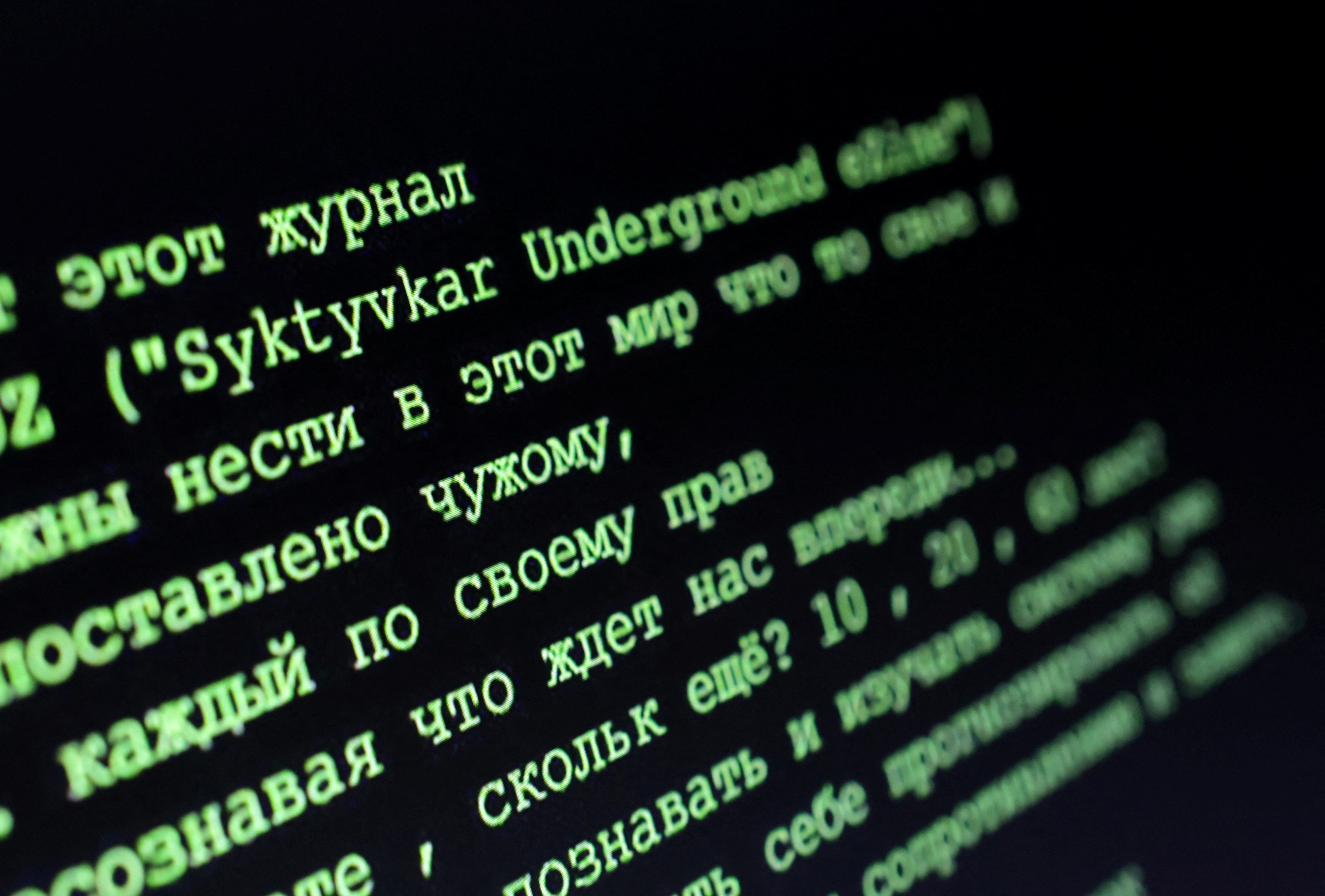

Cybercrime and Privacy Threats of Large Language Models

The Dangers of AI-Enhanced Hacking Techniques

Fortifying AI Integrity: Strategies Against the Malicious Use of Language Models - Cyberator GRC

Jailbreaking, Learn Prompting: Your Guide to Communicating with AI

LLM meets Malware: Starting the Era of Autonomous Threat

Hackers explore ways to misuse AI in major security test

ChatGPT and LLMs — A Cyber Lens, CIOSEA News, ETCIO SEA

What is Prompt Hacking and its Growing Importance in AI Security

arxiv-sanity

Best practices to overcome the risks of large language models

7 methods to secure LLM apps from prompt injections and jailbreaks [Guest]